Taken by the flood

An impressive onrush of current

Last January, I wrote about how I keep track of ideas for topics to write about. I said, when I have an idea, I create a “ticket” a bit like a “bug ticket” in a software development process, to keep track of it. What I didn’t say, though, is something that applies to writing ideas just as much as to bugs: it’s important to be descriptive. Things you think, now, are always going to be seared in your mind, will be over and gone in a few hours. Unfortunately, when I create a “writing ticket”, I will often only write down a few words and rely on my memory to know what the post was actually going to be about. When I come back to it later: baffled.

Take, for example, an idea I also wrote down back in January, a few weeks before writing that post. “Post about the fast-flowing waters of Stockholm.” Post what about them, exactly? I have no idea.

It’s true that the centre of Stockholm does have impressive fast-flowing water, a rushing, churning torrent that pours constantly in two streams, either side of Helgeandsholmen, the island taken up almost entirely by the Swedish parliament building. When I visited Stockholm, I walked through the old town and down to visit the museum of medieval Stockholm which lies underground, beneath the gardens in front of the parliament. When I reached the narrow channel of water separating the parliament building from Stockholm Palace, I was shocked and amazed by the speed and power of the water. It flowed from west to east in a solid, smooth-surfaced grey-green mass, as if it had an enormous weight behind it. It felt dangerous, unstoppable, and irresistible.

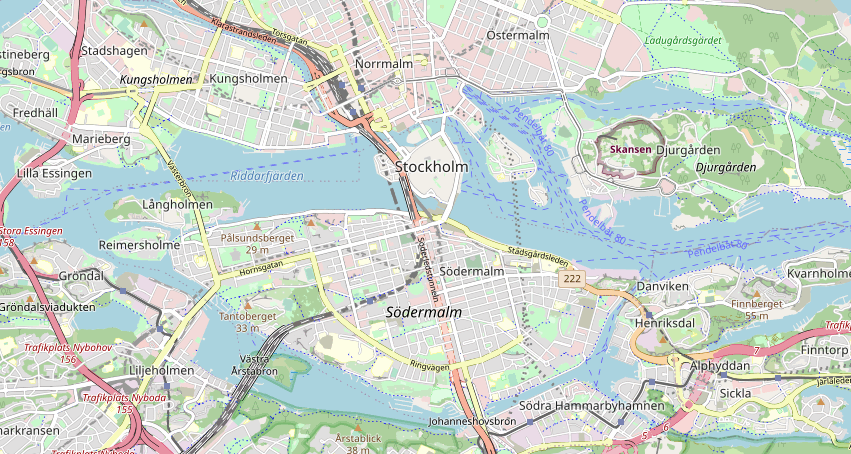

I already knew that Stockholm, and the Baltic in general, doesn’t really believe very much in having tides. Stockholm’s harbour is technically seawater, but there are many kilometres of archipelago between the city and the open sea, and to get to open ocean you have to go all the way around past Copenhagen and the tip of Jutland. Because of that, the Baltic isn’t particularly salty and doesn’t have very dramatic tides. This couldn’t, then, be a tidal flow. It took maps to show what it was.

The above extract from OpenStreetMap is © OpenStreetMap contributors and is licensed as CC BY-SA.

You can see how Stockholm is built around a relatively narrow point in the archipelago. What you can’t really see at this scale, though, is that all the channels of water to the right of the city centre are the main archipelago, leading out to sea, but the channels of water to the left of the city centre are all part of Mälaren, a freshwater lake some 120km long and with an area of over a thousand square kilometres. Most significantly, its average altitude is about 70cm above sea level. That slight-sounding difference adds up to roughly 800 billion litres of water above sea level, all trying to flow downhill and restricted to the two channels around Helgeandsholmen: the wide Norrström and the narrow Stallkanalen. Norrström, in particular, is a constant rush of choppy white water.
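If you want to check that figure, the sum is simple enough to sketch. This is only a back-of-envelope version: it treats the top 70cm of the lake as a flat slab, and the area of 1,140 square kilometres is an assumed figure for illustration (the text above only says "over a thousand"). The function and constant names are made up for this example.

```python
# Back-of-envelope check: how much of Mälaren sits above sea level?
# Treats the water above sea level as a flat slab: volume = area x height.

SQUARE_METRES_PER_SQUARE_KM = 1_000_000
LITRES_PER_CUBIC_METRE = 1_000


def litres_above_sea_level(area_km2: float, height_m: float) -> float:
    """Return the volume, in litres, of a slab of water with this area and thickness."""
    volume_m3 = area_km2 * SQUARE_METRES_PER_SQUARE_KM * height_m
    return volume_m3 * LITRES_PER_CUBIC_METRE


# Assumption: a surface area of about 1,140 square kilometres.
ASSUMED_AREA_KM2 = 1_140
# From the text: the lake surface is about 70cm above sea level.
HEIGHT_M = 0.70

litres = litres_above_sea_level(ASSUMED_AREA_KM2, HEIGHT_M)
print(f"{litres / 1e9:.0f} billion litres")
```

With those inputs the answer comes out a shade under 800 billion litres. Using a round thousand square kilometres instead gives 700 billion, so the figure is sensitive to the area you pick, but the order of magnitude holds either way.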

Why only those two channels? Although you can’t really see from the map, the other paths of blue that look like channels of open water, around Stadsholmen and Södermalm, were long ago canalised, blocked off with large locks. The two northernmost channels are the only free-flowing ones.

So, you might be wondering, what was the point of this post? Was there going to be some deeper meaning I had uncovered, some great symbolism or relevance to my everyday life? Frankly, it’s entirely possible, but I have no memory at all of what I was thinking when I wrote that note originally.

Right now, it’s tempting to say that sometimes, instead of trying to understand life and everything around you, instead of trying to predict what will happen and what the best course of action to take will be, it’s better just to sit back and ride the rollercoaster, and that the sight of the massive onrush of water through the centre of Stockholm made me think of just jumping in a small boat and shooting the rapids without worrying about what happens next. Equally, I might have been thinking of how you can find such a powerful force of nature right in the heart of somewhere as civilised as a modern European capital city. For that matter, it could have been a contrast between how placid, still and mirrorlike the waters of Mälaren a few hundred metres away outside Stockholm City Hall are, compared with the loud, rushing, foaming rapids we’re talking about here.

To be honest, I really don’t know. I know which of those thoughts seems most apt right now, but it might not even have occurred to me in January. At least, now I’ve written this, I can close that ticket happy in the knowledge that something fitting, in one sense, has been written under that heading. Now I’ve done that, I can flow onwards to the sea.
